Consumers searching today expect Google to display a wide variety of information from many sources in multiple formats. SEO professionals must understand the intricacies of Google’s algorithms and identify the best ways to make their content rank higher in the organic search results.

Google has published a new guide to its search ranking systems, listing the major algorithms, deployed over the years, that currently drive Google Search rankings.

Core ranking system

This is the single most important algorithm in determining where a web page ranks in organic Google Search results. When SEOs cite the 100+ ranking factors that determine a website’s position on Google SERPs, they’re usually talking about the core ranking system. It was created when Google was founded and has evolved significantly since then.

At its heart is a scoring system that evaluates the meaning of a searcher’s query, the relevance of your page to that query, the quality and usability of the page, and the user’s context and settings. Pages are ranked by their combined “score” across all of these criteria.

New algorithms are often launched and tested on their own; if Google determines that an algorithm is achieving its objective, it gets incorporated into the core ranking system. Infamous older systems, including Panda and Penguin, have been assimilated into the core ranking system over time.

What you need to know:

The basic principles of SEO haven’t changed in all these years: high-quality, unique, informative and relevant content from authoritative sources will still rank highest.

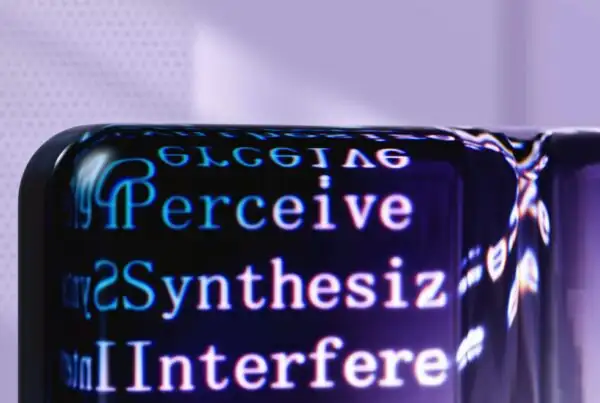

Natural language processing

Several recent Google systems focus on understanding language as humans naturally use it, and on processing it to match search queries with online content in the correct context. These systems go far beyond the early days of search, when ranking was based largely on keyword matching, and instead aim to understand search intent and true relevance.

Examples of where natural language processing (NLP) comes into play range from understanding all the different ways people may refer to shoes (footwear, sneakers, boots, moccasins, loafers, trainers) to distinguishing users who want to buy a wristwatch from those looking to watch movies online, even when they search for a query as ambiguous as ‘watch online’.

Systems that tackle these challenges for Google Search include:

- BERT

- Neural matching

- Passage ranking system

- RankBrain

- MUM

What you need to know:

It’s not necessary to match keywords exactly to queries or to create pages for every variation of your target keywords. Google’s systems are intelligent enough to detect contextual relevance almost the same way a human would. It is far more important to invest resources in creating original, informative content.

User experience grading

Ever since page speed and mobile-friendliness became ranking factors, Google has continued to push webmasters and SEO practitioners to improve their sites’ user experience. In recent years, Google has started showing Core Web Vitals in Search Console and the PageSpeed Insights tool. The main metrics in these reports are largest contentful paint (LCP), first input delay (FID), which is due to be replaced by interaction to next paint (INP) in 2024, and cumulative layout shift (CLS). Together they indicate how fast and how user-friendly a site is.
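Google publishes “good” and “poor” boundaries for each of these metrics (on web.dev), and rates a page on the 75th percentile of its real-user measurements. The Python sketch below reproduces that rating from p75 values you already have; the helper names and sample figures are hypothetical, and the thresholds are the published ones at the time of writing, so check them against the current documentation before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    good: float  # values at or below this rate "good"
    poor: float  # values above this rate "poor"


# Assumption: boundaries as published on web.dev at the time of writing.
THRESHOLDS = {
    "LCP": Threshold(good=2.5, poor=4.0),   # seconds
    "FID": Threshold(good=100, poor=300),   # milliseconds (being replaced by INP)
    "INP": Threshold(good=200, poor=500),   # milliseconds
    "CLS": Threshold(good=0.1, poor=0.25),  # unitless layout-shift score
}


def rate(metric: str, p75_value: float) -> str:
    """Rate one metric's 75th-percentile field value."""
    t = THRESHOLDS[metric]
    if p75_value <= t.good:
        return "good"
    if p75_value <= t.poor:
        return "needs improvement"
    return "poor"


def rate_page(p75: dict[str, float]) -> dict[str, str]:
    """Rate every metric supplied for a page (hypothetical helper)."""
    return {metric: rate(metric, value) for metric, value in p75.items()}


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    sample = {"LCP": 3.1, "INP": 180, "CLS": 0.05}
    for metric, rating in rate_page(sample).items():
        print(f"{metric}: {rating}")
```

A page only passes the assessment when every metric rates “good”, so the weakest metric is usually the one to work on first.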

Site security has also become an important ranking factor. Websites that don’t use HTTPS are likely to struggle to outrank secure websites with similar relevance and authority.

Beyond speed and security, Google also treats originality and reliability as significant factors in its users’ experience on the SERPs. Most recently, the September 2023 Helpful Content Update adjusted ranking systems for AI-generated content and cracked down on third-party content hosted on subdomains or within large websites. Systems that tackle user experience challenges for Google Search include:

- Page experience system

- Helpful content system

- Deduplication system

- Original content system

- Reliable information systems

What you need to know:

Using HTTPS and doing everything you can to speed up your site are obviously important. Webmasters should follow the suggestions provided by the PageSpeed Insights tool to improve their user experience as much as possible.

Beyond that, ensuring that the content on your site is unique and genuinely helpful goes a long way. Cannibalising your own content, especially through duplication, is a problem and should be avoided. Build trust by consistently fact-checking content and even employing authors who have a good reputation.
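One practical way to catch duplication on your own site is to compare the text of your pages pairwise. The sketch below uses w-shingling with Jaccard similarity, a standard near-duplicate heuristic; it is a self-audit aid, not how Google’s deduplication system works (that is not published). The 5-word shingle size, the 0.8 threshold and the sample URLs are arbitrary assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences in lower-cased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """|A & B| / |A | B|, or 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 5):
    """Yield (url_a, url_b, score) for page pairs at or above the threshold."""
    sigs = {url: shingles(body, k) for url, body in pages.items()}
    for a, b in combinations(sorted(sigs), 2):
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            yield a, b, score


if __name__ == "__main__":
    # Hypothetical pages: the sale page repeats the product page's copy.
    pages = {
        "/red-shoes": "Our red running shoes are lightweight breathable "
                      "and built for long distance training",
        "/red-shoes-sale": "Our red running shoes are lightweight, breathable, "
                           "and built for long-distance training!",
        "/boots": "Waterproof leather boots for hiking in winter "
                  "conditions with extra grip",
    }
    for a, b, score in near_duplicates(pages):
        print(f"{a} <-> {b}: {score:.2f}")
```

Because punctuation and case are ignored, the two shoe pages score as identical, which is exactly the kind of self-cannibalising pair worth consolidating or canonicalising.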

Spam detection

Ever since organic search rankings were identified as an important source of traffic, unscrupulous SEOs have tried to game Google’s algorithm to rank their own or their clients’ sites higher. Google employs a web spam team specifically to combat content and link spam.

Systems that tackle spam-related challenges for Google Search include:

- Spam detection systems

- Link analysis system

- PageRank

- Deduplication system

- Site diversity systems

- Exact match domain system

- Removal-based demotion systems
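PageRank is the one system on this list whose original formulation is public. The sketch below is the textbook power-iteration version with the commonly cited damping factor of 0.85; Google’s current production use of PageRank is not published and certainly differs, and the four-page link graph is hypothetical. It shows why links only carry weight when the linking page itself earns links.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> dict[str, float]:
    """Textbook PageRank by power iteration.

    `links` maps each page to the pages it links to. Pages with no
    outlinks (dangling pages) spread their rank evenly over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Rank held by dangling pages is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        # Each page splits its damped rank equally among its outlinks.
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank


if __name__ == "__main__":
    # Hypothetical graph: nothing links to "spam", so its outlink is worth little.
    graph = {
        "home": ["blog", "shop"],
        "blog": ["home", "shop"],
        "shop": ["home"],
        "spam": ["shop"],
    }
    for page, score in sorted(pagerank(graph).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.4f}")
```

In this toy graph the page that nothing links to ends up with the floor score (1 − d)/n, so the link it passes on is the weakest one in the graph.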

What you need to know:

The original Jagger, Penguin and Panda updates, and their subsequent versions, have become extremely effective at detecting the sources and users of SEO spam techniques, and at ignoring or even penalising them. These systems identify link exchanges, paid links, link farms and “thin content”. They not only ignore links that aren’t perceived to be high-quality, editorial, organic links, but also penalise websites that are consistently found to procure such links.

It is important for SEOs to invest in growing website authority through high-quality link acquisition, typically via digital PR, and to actively disavow spam links pointing to the site.
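Disavowing is done by uploading a plain-text file to Google’s disavow links tool in Search Console: one URL per line, a `domain:` prefix to disavow every link from a whole domain, and `#` for comment lines. The hypothetical helper below assembles such a file from an audit’s findings; the example domains are placeholders, and deciding which links are actually spam remains a manual judgment.

```python
def build_disavow(domains: list[str], urls: list[str], note: str = "") -> str:
    """Return disavow-file text: '#' comments, 'domain:' lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Whole domains, lower-cased, de-duplicated and sorted for easy review.
    for d in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{d}")
    # Individual URLs, de-duplicated while keeping their original order.
    seen = set()
    for u in urls:
        u = u.strip()
        if u and u not in seen:
            seen.add(u)
            lines.append(u)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow(
        domains=["Spammy-Links.example", "linkfarm.example", "spammy-links.example"],
        urls=["https://blog.example/paid-post.html",
              "https://blog.example/paid-post.html"],
        note="Links identified in backlink audit",
    )
    print(text, end="")
```

Disavowing a whole domain is usually more robust than listing URLs one by one, since spam sites tend to generate new linking pages over time.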

Special algorithms

During exceptional circumstances, such as national calamities, global pandemics and even significant local events, searchers turn to Google for everything from the latest news to helpline numbers. Google has developed special algorithms specifically to handle such queries. Systems that tackle these unusual challenges for Google Search include:

- Crisis information system

- Local news system

What you need to know:

Most commercial websites don’t need to concern themselves with these systems. Google takes great care to show reliable, vetted sources of information, and proactively works to identify sources of misinformation and either suppress them or flag them as unreliable.

If you do SEO for a charity or a national or global body that deals specifically with such challenges, it is important to understand these algorithms, and you might need to work directly with Google to ensure the correct information is made available to people in need.

Read the full guide to Google Search ranking systems

About the Author

Farhad is the Group CEO of AccuraCast. With over 20 years of experience in digital, Farhad is one of the leading technical marketing experts in the world. His specialities include digital strategy, international business, product marketing, measurement, marketing with data, technical SEO, and growth analytics.